** Edit on 11/6/2017: I hadn’t noticed before I wrote this post, but Raphael Schitz (@hypervisor_fr) beat me to the debunking! Please check out his great post on the subject as well here. **

I have been working with vSphere and VI for a long time now, and have spent the last six and a half years at VMware in the support organization. As you can imagine, I’ve encountered a great number of misconceptions from our customers, but one that comes up again and again concerns VM virtual NIC link speed.

Every so often, I’ll hear statements like “I need 10Gbps networking from this VM, so I have no choice but to use the VMXNET3 adapter”, “I reduced the NIC link speed to throttle network traffic” and even “No wonder my VM is acting up, it’s got a 10Mbps vNIC!”

I think that VMware did a pretty good job documenting the role varying vNIC types and link speed had back in the VI 3.x and vSphere 4.0 era – back when virtualization was still a new concept to many. Today, I don’t think it’s discussed very much. People generally use the VMXNET3 adapter, see that it connects at 10Gbps and never look back. Not that the simplicity is a bad thing, but I think it’s valuable to understand how virtual networking functions in the background.

Today, I hope to debunk the VM link speed myth once and for all. Not with quoted statements from documentation, but through actual performance testing.

The Lab Setup

To accomplish said debunking, I’ll be using my favorite network benchmark tool – iperf. The main VM I’ll use for testing link speed will be a Windows Server 2008 R2 machine. I’ll also be using a pair of other Linux VMs. One will be on the same host as the Windows machine, and the other on a separate host to add physical networking into the mix. I will be running iperf 2.0.5 in both ingress and egress directions so that I can test TX and RX.

I’ll be testing the following scenarios:

- VMXNET3 at 10Gbps

- VMXNET3 at 1Gbps

- VMXNET3 at 100Mbps

- VMXNET3 at 10Mbps/Full Duplex

- VMXNET3 at 10Mbps/Half Duplex

For good measure, I’ll also do the same with the E1000 virtual NIC adapter type to see if the results are limited only to VMXNET3.

I should note that I did no special tweaking of the Windows Server 2008 R2 machine or the Linux machines. These performance numbers are by no means an attempt to extract maximum performance out of the guests. In fact, much better numbers are possible with some tweaking and better resource allocation. My testing is simply meant to prove or disprove that configured VM link speed and duplex have any limiting impact on performance.

To perform the iperf test, I used the following syntax in Windows. Note that a manually specified TCP window size of 256KB is necessary for good throughput numbers in the Windows version of iperf:

```
C:\Users\Administrator\Desktop\iperf>iperf -c 172.16.10.151 -i 2 -t 10 -w 256K
------------------------------------------------------------
Client connecting to 172.16.10.151, TCP port 5001
TCP window size: 256 KByte
------------------------------------------------------------
[180] local 172.16.10.53 port 55892 connected with 172.16.10.151 port 5001
[ ID] Interval       Transfer     Bandwidth
[180]  0.0- 2.0 sec  2.35 GBytes  10.1 Gbits/sec
[180]  2.0- 4.0 sec  1.66 GBytes  7.13 Gbits/sec
[180]  4.0- 6.0 sec  1.75 GBytes  7.52 Gbits/sec
[180]  6.0- 8.0 sec  1.80 GBytes  7.72 Gbits/sec
[180]  8.0-10.0 sec  1.72 GBytes  7.41 Gbits/sec
[180]  0.0-10.0 sec  9.28 GBytes  7.96 Gbits/sec
```
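Why the window size matters: a single TCP stream can have at most one window of unacknowledged data in flight, so its throughput is capped at roughly window size divided by round-trip time. The sketch below works that out for the 256 KByte window used above; the round-trip times are hypothetical values chosen for illustration, not measurements from this lab.

```python
def window_limited_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Rough upper bound on one TCP stream's throughput: one window per round trip (bits/s)."""
    return window_bytes * 8 / rtt_seconds


WINDOW = 256 * 1024  # the -w 256K setting used above (iperf reports it as 256 KByte)

# Hypothetical round-trip times; measure your own environment with ping.
for rtt_ms in (0.2, 1.0):
    gbps = window_limited_bps(WINDOW, rtt_ms / 1000) / 1e9
    print(f"RTT {rtt_ms} ms -> at most {gbps:.2f} Gbits/sec")
# RTT 0.2 ms -> at most 10.49 Gbits/sec
# RTT 1.0 ms -> at most 2.10 Gbits/sec
```

With a small default window, the bound drops proportionally, which is consistent with the need to raise the window size in the Windows build of iperf.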

A similar command syntax was used with Linux, but without the manual window size specification. This relies on the automatic window sizing that seems to do better in Linux. To keep some of the variability in check, I ran each test three times and then took the average throughput value for the results.
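The averaging step can be scripted rather than done by hand. This is a minimal sketch, assuming each run's console output is saved as a string; it pulls the bandwidth figure from the last report line (the whole-run summary in iperf 2) and averages across runs. The helper names are hypothetical.

```python
import re
from statistics import mean
from typing import List

# Matches the bandwidth column of an iperf report line, e.g. "7.96 Gbits/sec".
_BANDWIDTH = re.compile(r"([\d.]+)\s+([KMG]?)bits/sec")
_SCALE = {"": 1.0, "K": 1e3, "M": 1e6, "G": 1e9}


def summary_bps(iperf_output: str) -> float:
    """Bandwidth of the last report line in the output, in bits/s."""
    matches = _BANDWIDTH.findall(iperf_output)
    if not matches:
        raise ValueError("no bandwidth figures found in iperf output")
    value, prefix = matches[-1]
    return float(value) * _SCALE[prefix]


def average_gbps(runs: List[str]) -> float:
    """Average the summary bandwidth of several runs, in Gbits/sec."""
    return mean(summary_bps(run) for run in runs) / 1e9


# The summary line from the sample run above; the other runs are parsed the same way.
sample = "[180]  0.0-10.0 sec  9.28 GBytes  7.96 Gbits/sec"
print(summary_bps(sample) / 1e9)
```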

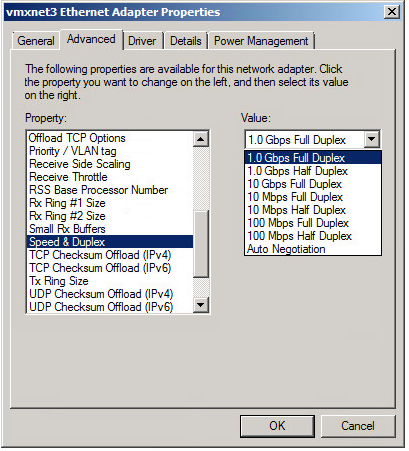

To modify the link speed, I simply changed the virtual NIC adapter properties in Windows. By default, the VMXNET3 adapter on this VM connects at 10Gbps. I can select from many different link speeds in the drop-down menu:

After clicking ‘OK’ and encountering a brief network disruption, the VM is back online with a 1Gbps link speed. As far as Windows is concerned, this adapter really is connected at 1Gbps now:

The Results

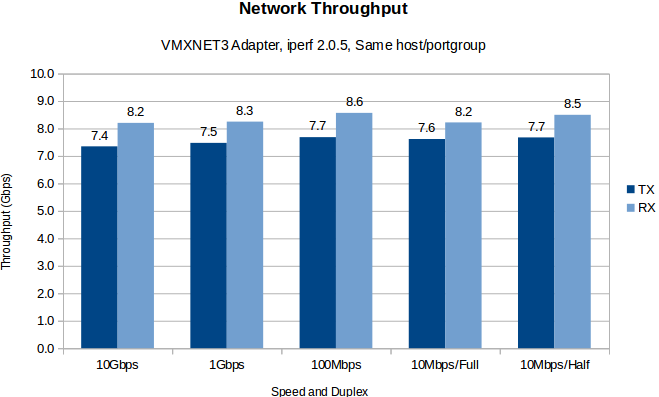

The first round of tests was done with the two VMs on the same ESXi host and the same portgroup. This allowed the maximum possible throughput without any physical networking limitations. As you can see below, the results are quite telling:

Clearly, configured link speed and duplex have no effect on performance. Even at 10Mbps/Half Duplex, the VM was able to transmit and receive at around eight hundred times its configured speed!
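To put "eight hundred times" in absolute terms: against a 10Mbps configured link, that multiple corresponds to roughly 8Gbps, in the same range as the 7.96 Gbits/sec summary of the sample run shown earlier. A trivial sketch of the arithmetic:

```python
MBPS = 1e6
GBPS = 1e9


def speed_multiple(measured_bps: float, configured_bps: float) -> float:
    """How many times faster the measured throughput is than the configured link speed."""
    return measured_bps / configured_bps


# Roughly 8Gbps measured against a 10Mbps/Half Duplex configuration.
print(round(speed_multiple(8 * GBPS, 10 * MBPS)))  # 800
```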

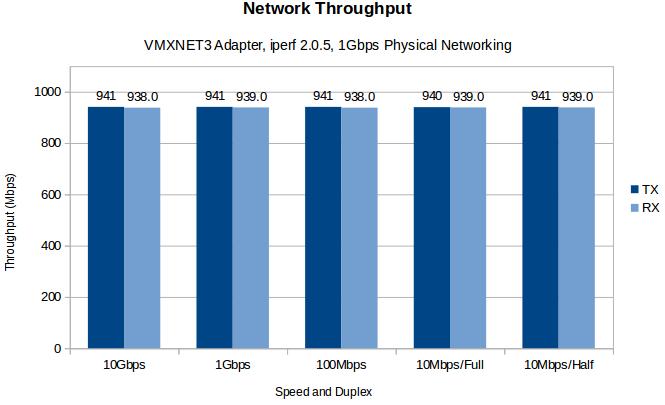

Again, this was with no physical networking involved. What if we repeat the test with the two VMs on different ESXi hosts? In this scenario, the hosts are connected via 1Gbps physical adapters and switches:

Once again, we see that despite the configured link speed, the VM could transmit and receive as fast as the hypervisor would allow. In this case, it was limited by the 1Gbps physical networking of the hosts and switches.

You may be thinking: “What if this is simply some wizardry with the VMXNET3 paravirtual adapter? Maybe other adapter types behave differently?” Good question – let’s have a look at the older emulated E1000 adapter type. This adapter is an emulated version of the Intel Pro/1000 MT server adapter. This particular card has a maximum configurable speed of 1Gbps – it is not a 10Gbps adapter, nor does its driver even support 10Gbps.

And again, we see the same pattern. Link speed – even from an adapter type that never supported 10Gbps – has no bearing at all on actual throughput and performance.

Use Cases and Limiting Throughput

At this point, it really does seem that setting link speed in a VM serves no purpose other than to tell the guest operating system what the connection speed is. I suppose it’s possible that some older software applications may be programmed to check for a specific link speed in the guest. In this case, it may be useful, but I’ve never seen such an application before.
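On a Linux guest, the link speed a vNIC reports is exposed by the kernel in `/sys/class/net/<interface>/speed`, in Mb/s. An application that gates on link speed might do something like the hypothetical check below; note that it only ever sees the reported value, which, as the tests above show, says nothing about achievable throughput. The function names and the `eth0` interface name are illustrative assumptions.

```python
from pathlib import Path
from typing import Optional


def reported_speed_mbps(interface: str, sysfs_root: str = "/sys/class/net") -> Optional[int]:
    """Link speed the Linux guest reports for `interface`, in Mb/s, or None if unavailable."""
    speed_file = Path(sysfs_root) / interface / "speed"
    try:
        value = int(speed_file.read_text().strip())
    except (OSError, ValueError):
        # Missing interface, link down, or a driver that does not report a speed.
        return None
    # Some drivers report -1 when the speed is unknown.
    return value if value > 0 else None


def meets_link_requirement(interface: str, required_mbps: int,
                           sysfs_root: str = "/sys/class/net") -> bool:
    """Hypothetical application check that gates on the *reported* link speed."""
    speed = reported_speed_mbps(interface, sysfs_root)
    return speed is not None and speed >= required_mbps


# Example: meets_link_requirement("eth0", 1000) on a guest whose vNIC reports 10Mbps
# returns False, even though that vNIC can move far more than 1Gbps.
```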

I’ve also heard of customers attempting to artificially limit network throughput on metered circuits by reducing link speed. This may work in the physical world, but clearly won’t with a VM. Thankfully, even standard vSwitches support traffic shaping, which can cap a VM’s throughput (on a standard vSwitch, shaping applies to outbound traffic only; a distributed switch can shape both directions). If limiting throughput is your goal, traffic shaping is the tool to use.
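vSphere traffic shaping is configured with an average bandwidth, a peak bandwidth and a burst size. The sketch below is a simplified token-bucket model of the average-bandwidth/burst-size idea, not VMware’s implementation; peak bandwidth is not modeled, and the class name and units are assumptions made for illustration.

```python
class TokenBucket:
    """Simplified token-bucket shaper: an illustrative model only."""

    def __init__(self, average_bps: float, burst_bytes: float) -> None:
        self.rate = average_bps / 8.0  # refill rate in bytes per second
        self.capacity = burst_bytes    # most tokens (bytes) that can accumulate
        self.tokens = burst_bytes      # start with a full bucket
        self.last = 0.0                # time of the previous update, in seconds

    def allow(self, size_bytes: int, now: float) -> bool:
        """Return True if a frame of size_bytes may be sent at time `now`."""
        # Refill for the time elapsed since the last call, capped at the burst size.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False  # over the shaped rate: the frame must wait (or be dropped)
```

Unlike a guest-side link speed setting, a limit like this is enforced by the switch itself, so the guest cannot exceed it no matter what its vNIC reports.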

Conclusion

So there you have it! Configured link speed and duplex in a guest OS have no impact whatsoever on VM network throughput.

I have to admit it was a bit painful running through all of these tests when I already knew what the outcome would be. Nonetheless, I hope this post serves as a good reference for those looking for definitive information on the subject.

Thank you for the write-up! This is exactly the type of article I was looking for! I haven’t had the chance to run iperf tests between my FreeNAS VM and my other (Windows and Linux) VMs, so this gave me some peace of mind that the reported link speed is not exactly true for either E1000 or VMXNET3, and that actual throughput can be much greater. Next up is to test an actual 10Gb direct link to my local machine.

Now you leave people wondering why there is even a VMXNET3 device and an E1000. Although it is not visible in your test, there is an advantage to using VMXNET3: if you have two VMs on the same hypervisor, both with VMXNET3, your data never goes through all the OSI layers; it’s just handed over between the VMs, meaning the hypervisor has less overhead (CPU) from emulating the network stack.

Thank you for this article Mike… Its crystal clear now!

If I have a VM configured with only one network adapter (one vNIC) connected to a vSwitch, and that vSwitch has 3 physical GigE links (pNICs) to an external hardware switch, does the VM get to use all 3Gbps of bandwidth? Or do I need to create a total of 3 network adapters (3 vNICs) in the VM to be able to utilize all 3 external GigE links?

Hi Felipe, adding additional vNICs in the guest wouldn’t be a good idea. In order to use multiple uplinks, you would need to use an EtherChannel/port channel on the physical switch and configure your vSwitch with IP hash load balancing (or LACP on a vDS). This adds a lot of complexity and has some disadvantages, so unless your guests really need more than 1Gbps, I’d recommend against it. Hope this helps.
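IP hash load balancing picks an uplink from the source and destination IP addresses of each packet. The sketch below assumes a simplified XOR-then-modulo hash (the exact function ESXi uses may differ) to show why even an EtherChannel setup does not let one VM-to-one-destination stream use all three links: the same address pair always maps to the same uplink.

```python
import ipaddress


def select_uplink(src_ip: str, dst_ip: str, active_uplinks: int) -> int:
    """Simplified IP-hash model: XOR both IPv4 addresses, then modulo the uplink count."""
    src = int(ipaddress.IPv4Address(src_ip))
    dst = int(ipaddress.IPv4Address(dst_ip))
    return (src ^ dst) % active_uplinks


# One VM talking to one peer always lands on the same uplink, so a single
# iperf stream still tops out at one 1Gbps link; other peers may spread out.
vm = "172.16.10.53"
for peer in ("172.16.10.151", "172.16.10.152"):
    print(peer, "-> uplink", select_uplink(vm, peer, 3))
# 172.16.10.151 -> uplink 0
# 172.16.10.152 -> uplink 2
```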

Thanks for the information, the work is appreciated. I know this post is dated, but I have NEVER EVER been able to get 10 Gb/s. I have been working with VMware for a long time. I think something else is going on with your data or your scenario.

I just stood up a virgin ESXi 6.7 host with one port group; I put 2x Windows VMs (verified using VMXNET) in that single port group and ran iPerf v3.1.3 (the latest since 2017). I can’t get more than 2Gb of throughput VM to VM. Also note that my output from iPerf does not look like your output….

```
C:\Users\mf\iperf-3.1.3-win64>iperf3.exe -c 192.168.210.118 -i 2 -t 10 -w 256K
Connecting to host 192.168.210.118, port 5201
[ 4] local 192.168.210.35 port 61750 connected to 192.168.210.118 port 5201
[ ID] Interval          Transfer     Bandwidth
[ 4]  0.00-2.00   sec   233 MBytes   977 Mbits/sec
[ 4]  2.00-4.00   sec   279 MBytes  1.17 Gbits/sec
[ 4]  4.00-6.00   sec   317 MBytes  1.33 Gbits/sec
[ 4]  6.00-8.00   sec   320 MBytes  1.34 Gbits/sec
[ 4]  8.00-10.00  sec   318 MBytes  1.34 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval          Transfer     Bandwidth
[ 4]  0.00-10.00  sec  1.43 GBytes  1.23 Gbits/sec    sender
[ 4]  0.00-10.00  sec  1.43 GBytes  1.23 Gbits/sec    receiver

iperf Done.
```

Same here, dating back to ESXi 6.7. Even today with ESXi 8, no better performance. So sad….

Great post, thanks