As we saw in the first half of scenario 6, a fictional administrator enabled the DFW in their management cluster, which caused some unexpected filtering to occur. The DFW was now blocking the HTTPS (TCP port 443) traffic that their vCenter Server needs for the vSphere Web Client to work.

Since we can no longer manage the environment or the DFW using the UI, we’ll need to revert this change using some other method.

As mentioned previously, we are fortunate in that NSX Manager is always excluded from DFW filtering by default. This is done to protect against this very type of situation. Because the NSX management plane is still fully functional, we should – in theory – still be able to send API-based DFW calls to NSX Manager, which will in turn publish these changes to the necessary ESXi hosts.

There are two relatively easy ways to fix this that come to mind:

- Use the approach outlined in KB 2079620. This is the equivalent of doing a factory reset of the DFW ruleset via API. This will wipe out all rules and they’ll need to be recovered or recreated.

- Use an API call to disable the DFW in the management cluster. This will essentially revert the exact change the user made in the UI that started this problem.

There are other options, but the above two will restore HTTP/HTTPS connectivity to vCenter. Once that is done, some remediation will be necessary to ensure this doesn't happen again. Rather than picking one solution, I'll go through both of them.

Option 1 – Factory Reset

I jokingly call this the ‘sledgehammer’ approach because it removes all the DFW rules defined in NSX and requires them to be re-created or recovered. I’ll discuss this option first, because it’s what VMware’s public KB articles and documentation refer to.

Note: If you have a very security-sensitive deployment, this may not be a feasible solution for you. I'd recommend reaching out to VMware Support for help determining the best course of action.

This can be accomplished using an API ‘DELETE’ of the global firewall configuration. The specifics are outlined on page 203 of the NSX 6.3 API guide.

DELETE https://nsxmgr/api/4.0/firewall/globalroot-0/config

After sending this from my API client, I got back an empty response.

It may not look like it did anything, but the 204 (No Content) response code simply means that the request was accepted and didn't return any content. This is exactly what we should see.
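For anyone who prefers to script the reset rather than use a REST client, the sketch below builds the same DELETE request with Python's standard library and treats a 204 status as success. It's a minimal sketch, assuming HTTP basic authentication against the NSX Manager API; the hostname `nsxmgr` comes from the call above, while the credentials and all function names are hypothetical placeholders.

```python
import base64
import ssl
import urllib.request

# Hypothetical helpers: "nsxmgr" matches the URL above, credentials are placeholders.


def basic_auth_header(user: str, password: str) -> str:
    """Return an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return f"Basic {token}"


def build_dfw_reset_request(host: str, user: str, password: str) -> urllib.request.Request:
    """Build the DELETE of the global DFW configuration (resets rules to defaults)."""
    url = f"https://{host}/api/4.0/firewall/globalroot-0/config"
    return urllib.request.Request(
        url, method="DELETE", headers={"Authorization": basic_auth_header(user, password)}
    )


def reset_succeeded(status: int) -> bool:
    """204 No Content: the request was accepted and nothing was returned."""
    return status == 204


def send(req: urllib.request.Request, context: ssl.SSLContext) -> int:
    """Send the request and return the HTTP status (not called in this sketch)."""
    with urllib.request.urlopen(req, context=context) as resp:
        return resp.status
```

To actually run it, you'd pass the request to `send()` with an `ssl.SSLContext` that trusts your NSX Manager certificate and check the returned status with `reset_succeeded()`. Keep in mind that this wipes every DFW rule, exactly like the REST client call.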

Within a few seconds I was able to get back into the vSphere Web Client. Before doing anything else, I disabled the DFW in the management cluster so that we don’t lock ourselves out again.

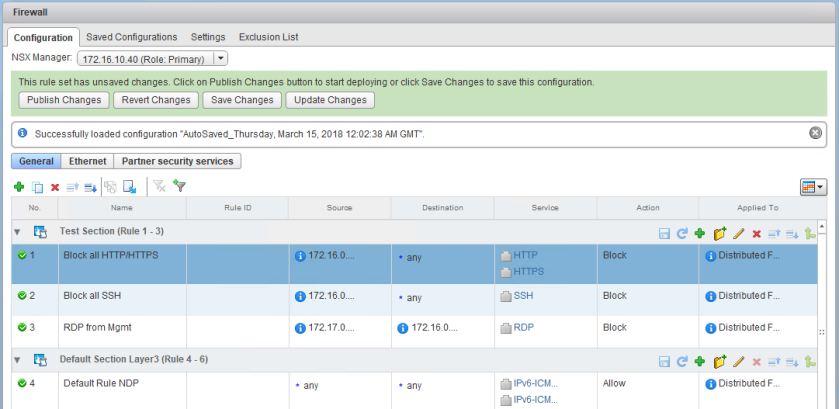

After the reset, the DFW configuration is back to the defaults.

The section called ‘Test’ that existed previously is no longer there. Now, you could start re-creating your firewall rules, but this would obviously be an arduous task if you had a lot of them. Instead, it's much easier to load the auto-save that was preserved during the last successful publish operation.

In my lab, several auto-saves were available, and the most recent one prior to recovering is from 3/14 at 8:02 PM. You can load a saved configuration from the ‘Configuration’ tab of the firewall. After a few seconds, the ruleset is back just as it was prior to deleting the configuration via the API.

It’s safe to hit ‘Publish’ at this time because we’ve already turned off the DFW in the management cluster. Now things are right back to where they started.

I’ll discuss a second, easier option and then we’ll go over cleanup and prevention steps.

Option 2 – Disabling the DFW

In this scenario, the second option would be my preference, because it gets the customer right back to where they were before things broke and avoids removing any existing firewall rules.

Disabling the DFW for a specific cluster is straightforward using the NSX APIs. You can find this specific call on page 238 of the NSX 6.3 API guide.

PUT https://nsxmgr/api/4.0/firewall/{domainID}/enable/{truefalse}
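The `{domainID}` placeholder takes the cluster moref and `{truefalse}` takes the literal string `true` or `false`. Here is a small sketch of that substitution, assuming the same basic-auth setup as any other NSX API call; the hostname `nsxmgr`, the credentials and the helper names are hypothetical.

```python
import base64
import urllib.request


def dfw_toggle_url(host: str, domain_id: str, enable: bool) -> str:
    """Fill in PUT /api/4.0/firewall/{domainID}/enable/{truefalse}."""
    flag = "true" if enable else "false"
    return f"https://{host}/api/4.0/firewall/{domain_id}/enable/{flag}"


def build_dfw_toggle_request(
    host: str, domain_id: str, enable: bool, user: str, password: str
) -> urllib.request.Request:
    """Build (but do not send) the PUT that enables or disables the DFW on a cluster."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        dfw_toggle_url(host, domain_id, enable),
        method="PUT",
        headers={"Authorization": f"Basic {token}"},
    )
```

Passing `enable=True` later produces the call that turns the DFW back on once the rules have been fixed.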

The one piece of information we’ll need to do this is the moref identifier used by the management cluster. Since we can’t get this from vCenter Server, we’ll need to use the CLI. If you refer to my recent post on finding moref identifiers, the NSX central CLI sounds like it would be our best bet. Unfortunately, because NSX Manager tries to poll vCenter Server for cluster information over port 443, the command times out:

nsxmanager.lab.local> show cluster all
Command "show cluster all" timed out after 60 seconds

In fact, any command or API call that needs to query vCenter Server will likely fail at this point, because that communication also uses port 443. To get the moref, we can instead look at the cached firewall configuration on an ESXi host in the management cluster:

[root@esx0:~] vsipioctl loadruleset | head -7
Loading ruleset file: /etc/vmware/vsfwd/vsipfw_ruleset.dat
##################################################
# ruleset message dump #
##################################################
ActionType : replace
Id : domain-c205
Name : domain-c205

As you can see above, domain-c205 is the moref identifier of the cluster this host belongs to. Now that we have the required information, we can do a simple PUT API call to disable the firewall on the management cluster:

PUT https://nsxmgr/api/4.0/firewall/domain-c205/enable/false
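If you're collecting this from several hosts, pulling the moref out of saved `vsipioctl loadruleset` output is easy to script. The sketch below assumes the `Id : domain-cNNN` line format shown above and returns the first cluster ID it finds; the function name is hypothetical.

```python
import re
from typing import Optional

# Matches a line such as "Id : domain-c205" in vsipioctl loadruleset output.
CLUSTER_ID_LINE = re.compile(r"^\s*Id\s*:\s*(domain-c\d+)\s*$", re.MULTILINE)


def cluster_moref_from_ruleset(dump: str) -> Optional[str]:
    """Return the first cluster moref found in a ruleset dump, or None."""
    match = CLUSTER_ID_LINE.search(dump)
    return match.group(1) if match else None


# Copy of the output captured from esx0 above.
SAMPLE = """\
Loading ruleset file: /etc/vmware/vsfwd/vsipfw_ruleset.dat
##################################################
# ruleset message dump #
##################################################
ActionType : replace
Id : domain-c205
Name : domain-c205
"""
```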

The change was successful, and I could immediately get back into the vSphere Web Client.

Central CLI commands that need to query vCenter also work now. The output below confirms that the firewall is disabled in the management cluster:

nsxmanager.lab.local> show dfw cluster all
No.  Cluster Name  Cluster Id   Datacenter Name  Firewall Status  Firewall Fabric Status
1    compute-a     domain-c121  lab              Enabled          GREEN
2    management    domain-c205  lab              Not Enabled      GREY
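For a scripted check rather than eyeballing the table, the rows can be parsed with a regular expression. This is a sketch that assumes the column layout shown above and cluster and datacenter names without spaces; the function name is hypothetical.

```python
import re
from typing import Dict

# One data row: No., Cluster Name, Cluster Id, Datacenter Name, Firewall Status, Fabric Status.
ROW = re.compile(
    r"^\s*\d+\s+(?P<name>\S+)\s+(?P<moref>domain-c\d+)\s+\S+\s+"
    r"(?P<status>Enabled|Not Enabled)\s+(?P<fabric>\S+)\s*$"
)


def dfw_status_by_moref(output: str) -> Dict[str, str]:
    """Map each cluster moref to its Firewall Status column."""
    result = {}
    for line in output.splitlines():
        match = ROW.match(line)
        if match:
            result[match.group("moref")] = match.group("status")
    return result


SAMPLE = """\
No.  Cluster Name  Cluster Id   Datacenter Name  Firewall Status  Firewall Fabric Status
1    compute-a     domain-c121  lab              Enabled          GREEN
2    management    domain-c205  lab              Not Enabled      GREY
"""
```

Checking `dfw_status_by_moref(output)["domain-c205"]` before and after the re-enable step gives a quick confirmation that the management cluster changed state.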

Now that we can get back in, we’ll need to make the necessary preventative changes.

Correcting the Rules

In discussing this situation with our fictional NSX administrator, we’ve learned that the three rules defined in the ‘Test’ section were only intended for the compute-a cluster and should not be applied to the management cluster. They’ve also told us that they enabled the firewall in the management cluster because they plan to start adding some new rules that will apply there. Ultimately, it’ll need to be enabled.

Knowing this, there are a few different options they can consider. First, they could use the compute-a cluster object as the source of all three firewall rules instead of a wide-ranging IP subnet. So long as they have VMware Tools or another IP detection method configured, this would work just fine. Alternatively, they could modify the ‘Applied To’ field of these rules to ensure they only get pushed to VMs in the compute-a cluster. This latter method would also have some performance benefits, because hosts in the management cluster would not need to process any of the rules intended for compute-a.
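To make the ‘Applied To’ idea concrete, here's a toy model, not NSX's actual implementation: each rule carries an optional set of cluster morefs, and a cluster only receives rules that are unscoped or scoped to it. The rule IDs mirror the ones in this scenario; the `Rule` type and the `rules_pushed_to()` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Rule:
    rule_id: int
    # None models the default 'Applied To' of the whole Distributed Firewall.
    applied_to: Optional[FrozenSet[str]] = None


def rules_pushed_to(cluster_moref: str, rules: List[Rule]) -> List[int]:
    """Return the IDs of rules that hosts in the given cluster would have to process."""
    return [
        r.rule_id
        for r in rules
        if r.applied_to is None or cluster_moref in r.applied_to
    ]


COMPUTE_A = frozenset({"domain-c121"})
RULES = [
    Rule(1017, COMPUTE_A),  # 'Test' section rules, scoped to compute-a
    Rule(1016, COMPUTE_A),
    Rule(1015, COMPUTE_A),
    Rule(1014),  # default section rules, applied everywhere
    Rule(1013),
    Rule(1009),
]
```

In this model the management cluster (`domain-c205`) ends up with three fewer rules to evaluate, which is where the performance benefit of scoping comes from.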

We’ll modify the rules in the ‘Test’ section so that they apply only to compute-a.

Once these simple changes are made, we can re-enable the DFW in the management cluster.

If we look at the actual rules that are applied to a VM in the compute-a cluster versus what is applied to the vCenter Server, we can see a very different picture. I’ll be using the vsipioctl command as I described in the first half of this scenario:

[root@esx-a1:~] vsipioctl getrules -f nic-21477632-eth0-vmware-sfw.2
ruleset domain-c121 {
  # Filter rules
  rule 1017 at 1 inout protocol tcp from ip 172.16.0.0/12 to any port 80 drop with log;
  rule 1017 at 2 inout protocol tcp from ip 172.16.0.0/12 to any port 443 drop with log;
  rule 1016 at 3 inout protocol tcp from ip 172.16.0.0/12 to any port 22 drop;
  rule 1015 at 4 inout protocol tcp from ip 172.17.0.0/16 to ip 172.16.0.0/16 port 3389 drop;
  rule 1014 at 5 inout protocol ipv6-icmp icmptype 135 from any to any accept;
  rule 1014 at 6 inout protocol ipv6-icmp icmptype 136 from any to any accept;
  rule 1013 at 7 inout protocol udp from any to any port 67 accept;
  rule 1013 at 8 inout protocol udp from any to any port 68 accept;
  rule 1009 at 9 inout protocol any from any to any accept with log;
}
ruleset domain-c121_L2 {
  # Filter rules
  rule 1012 at 1 inout ethertype any stateless from any to any accept;
}

The above is for a Windows VM in the compute-a cluster. It looks exactly like what was applied to the vCenter Server when things were broken. Now let’s look at vCenter’s ruleset. Remember, we’ve turned the DFW back on in the management cluster, so the vCenter Server is still subject to DFW filtering.

[root@esx0:/var/log] vsipioctl getrules -f nic-69262-eth0-vmware-sfw.2
ruleset domain-c205 {
  # Filter rules
  rule 1014 at 1 inout protocol ipv6-icmp icmptype 135 from any to any accept;
  rule 1014 at 2 inout protocol ipv6-icmp icmptype 136 from any to any accept;
  rule 1013 at 3 inout protocol udp from any to any port 68 accept;
  rule 1013 at 4 inout protocol udp from any to any port 67 accept;
  rule 1009 at 5 inout protocol any from any to any accept with log;
}
ruleset domain-c205_L2 {
  # Filter rules
  rule 1012 at 1 inout ethertype any stateless from any to any accept;
}

Notice that rules 1015, 1016 and 1017 don’t exist on vCenter’s slot-2 dvFilter. That’s because the ‘Applied To’ field now limits these rules to the compute-a cluster.
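The same comparison can be done mechanically by pulling the rule IDs out of two saved `vsipioctl getrules` outputs and taking the set difference. This is a sketch that assumes the `rule <id> at <position>` line format shown above; the samples are trimmed copies of the two outputs, and the helper name is hypothetical.

```python
import re
from typing import Set

# Matches lines such as "rule 1017 at 1 inout protocol tcp ..." and captures the rule ID.
RULE_LINE = re.compile(r"^\s*rule\s+(\d+)\s+at\s+\d+", re.MULTILINE)


def rule_ids(getrules_output: str) -> Set[int]:
    """Return the distinct rule IDs programmed on a dvFilter."""
    return {int(m.group(1)) for m in RULE_LINE.finditer(getrules_output)}


COMPUTE_A_VM = """\
rule 1017 at 1 inout protocol tcp from ip 172.16.0.0/12 to any port 80 drop with log;
rule 1017 at 2 inout protocol tcp from ip 172.16.0.0/12 to any port 443 drop with log;
rule 1016 at 3 inout protocol tcp from ip 172.16.0.0/12 to any port 22 drop;
rule 1015 at 4 inout protocol tcp from ip 172.17.0.0/16 to ip 172.16.0.0/16 port 3389 drop;
rule 1014 at 5 inout protocol ipv6-icmp icmptype 135 from any to any accept;
rule 1013 at 7 inout protocol udp from any to any port 67 accept;
rule 1009 at 9 inout protocol any from any to any accept with log;
"""

VCENTER_VM = """\
rule 1014 at 1 inout protocol ipv6-icmp icmptype 135 from any to any accept;
rule 1013 at 3 inout protocol udp from any to any port 68 accept;
rule 1009 at 5 inout protocol any from any to any accept with log;
"""

# Rules present on the compute-a VM's filter but not on vCenter Server's.
ONLY_ON_COMPUTE_A = sorted(rule_ids(COMPUTE_A_VM) - rule_ids(VCENTER_VM))
```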

Prevention

Quite often, you’ll see NSX deployments with dedicated management clusters where there are no NSX VIBs deployed and none of the NSX services are in use. This type of design eliminates these fundamental risks, but it may not always be feasible in smaller deployments. In some cases, you may have no choice but to run your vCenter Server and other critical VMs on the same hosts as DFW-protected machines. This is supported and isn’t a problem in itself; however, best practices should be followed to protect yourself from this sort of situation.

It’s always a good idea to compile a list of the virtual machines that are critical for managing the environment. For example, in my lab, I need vCenter Server, the PSC, Active Directory and DNS services to function. If any of these are down, I’ll struggle to manage the environment.

Normally these VMs shouldn’t be using the same data path – e.g. VXLAN, DLR, etc. – and are treated differently than workload machines consuming the network virtualization services. This usually applies from a security perspective as well.

Unless you have a security requirement to apply the DFW to these critical management machines, it’s usually a good practice to simply add them to the NSX DFW exclusion list. Every VM on this list is stripped of its slot 2 dvFilter and is not subject to any of the configured DFW rules.
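One simple way to keep the exclusion list honest is to compare your list of critical VMs against it periodically. The sketch below does that as a set difference and builds the URL for adding a missing VM. The VM names and morefs are made up, and the `PUT /api/2.1/app/excludelist/{memberID}` endpoint is an assumption based on the NSX 6.x API, so verify it against the API guide for your release before relying on it.

```python
from typing import Dict, Iterable, List

# Hypothetical inventory of management-critical VMs: name -> VM moref.
CRITICAL_VMS: Dict[str, str] = {
    "vcenter": "vm-101",
    "psc": "vm-102",
    "ad-dns": "vm-103",
}


def missing_from_exclusion_list(
    critical: Dict[str, str], excluded_morefs: Iterable[str]
) -> List[str]:
    """Return the names of critical VMs that are not on the DFW exclusion list."""
    excluded = set(excluded_morefs)
    return sorted(name for name, moref in critical.items() if moref not in excluded)


def exclusion_add_url(host: str, vm_moref: str) -> str:
    # Assumption: NSX 6.x endpoint PUT /api/2.1/app/excludelist/{memberID}.
    return f"https://{host}/api/2.1/app/excludelist/{vm_moref}"
```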

Conclusion

I hope this was useful. Please keep the troubleshooting scenario suggestions coming! Please feel free to leave a comment below or reach out to me on Twitter (@vswitchzero)