In my previous post on the Synology DS1621+, I configured storage pools and volumes. Now that our storage is ready for use, I’ll be configuring iSCSI in my VMware vSphere lab environment.

Network Configuration

A proper network setup is the foundation for a successful iSCSI deployment. I won’t go into too much detail about vSphere network configuration in this post, but here are some general recommendations when it comes to iSCSI:

- Use a dedicated VLAN for iSCSI. Do not use it for any other purpose.

- Use a dedicated subnet and ensure it is non-routable.

- Use a dedicated VMkernel port for iSCSI in the created subnet.

- If possible, ensure you have redundant NICs configured on your hosts and storage box.

- Use a 9000 MTU if possible (more on this later).

To begin, I’ll be configuring the interface settings on the DS1621+. There are many different ways this can be done, but I only have one spare 10Gbps port currently, so I’ll forgo a proper multipathing configuration and keep things simple. That said, I’ll still configure two interfaces – a single 1Gbps interface for management, and a single 10Gbps interface in a different VLAN for exclusive iSCSI use.

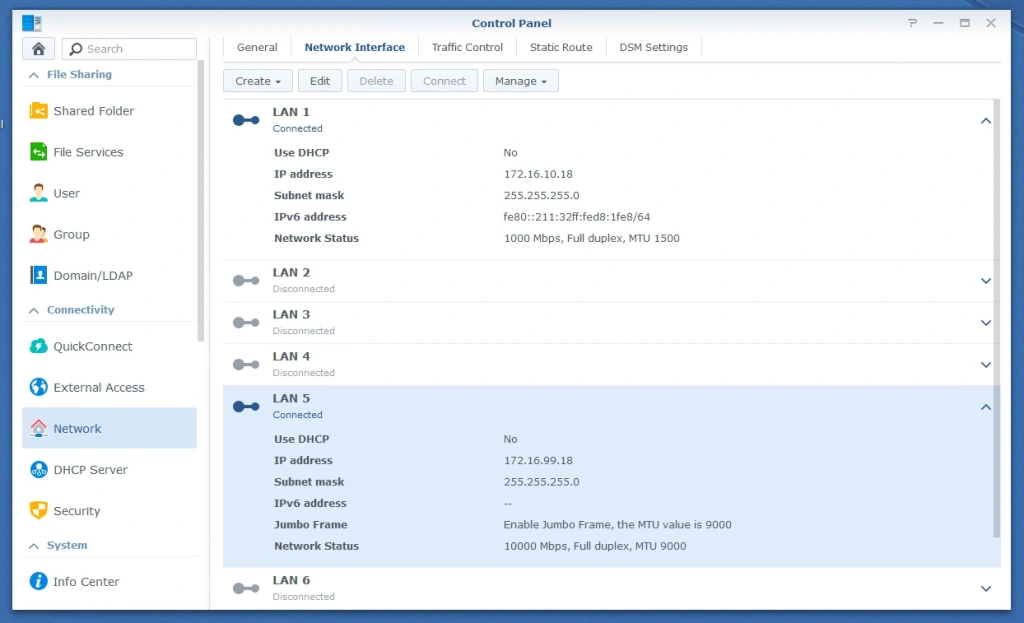

LAN 1 is a 1Gbps interface that I have in my management network (172.16.10.0/24). This interface will be used to access the Synology DSM interface and anything else that is not iSCSI related. In my case, LAN 5 and LAN 6 are the 10Gbps ports on the DS1621+. The 172.16.99.0/24 network is my iSCSI network and is non-routable. Both interfaces are connected to “access” ports on my physical switch. Since there is no 802.1Q VLAN tagging on these ports, no VLAN IDs are specified on the DS1621+. I’ll show you how to restrict iSCSI to a specific interface when we configure iSCSI targets later on. Next, we’ll move on to the vSphere networking configuration.

Because I already have my TrueNAS box up and running in VLAN 99, my vSwitch and VMkernel ports are already configured correctly for iSCSI in this network. I won’t get too much into vSphere networking configuration today, but I’ll at least show you how I have things configured.

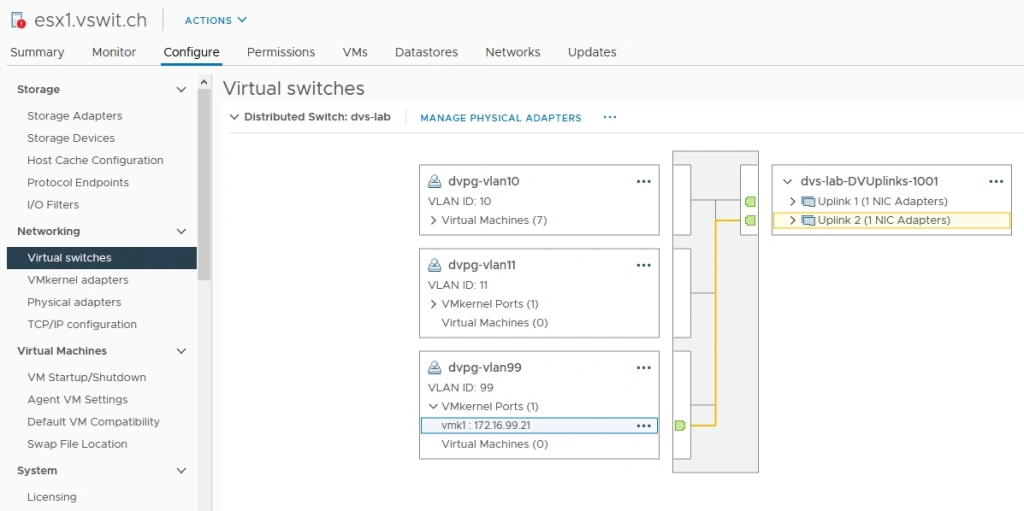

My distributed switch, “dvs-lab”, has a dvPortgroup configured with a VLAN ID of 99. Unlike the DS1621+, each of the 10Gbps NICs here is connected to an 802.1Q VLAN-tagged port on my switch. I have the default “route based on originating port ID” teaming configured on all the dvPortgroups.

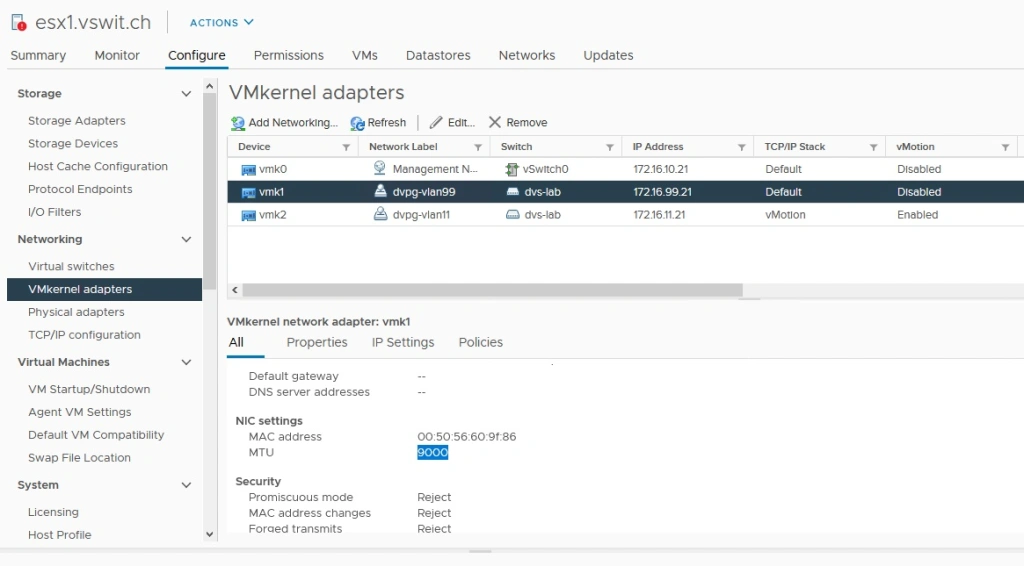

I also have a dedicated VMkernel port configured for iSCSI in the 172.16.99.0/24 network. In the example above, host esx1 uses 172.16.99.21. Note that I have not configured a gateway for this VMkernel interface because I want this network to remain non-routable. The same is true for my physical layer-3 switch – there are no VIFs attached to the VLAN.
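As a quick sanity check, the addressing described above can be expressed in a few lines of Python. This is only a sketch: the subnets and the esx1 address come from this post, while the function name `check_iscsi_address` is made up for illustration. It confirms that the VMkernel address sits inside the iSCSI subnet and that the iSCSI subnet does not overlap the management network.

```python
import ipaddress

# Values taken from this post.
MGMT_NET = ipaddress.ip_network("172.16.10.0/24")
ISCSI_NET = ipaddress.ip_network("172.16.99.0/24")
ESX1_VMK = ipaddress.ip_address("172.16.99.21")


def check_iscsi_address(addr, iscsi_net, other_nets):
    """Return a list of problems found (hypothetical helper, empty list means OK)."""
    problems = []
    # The VMkernel port must live inside the dedicated iSCSI subnet.
    if addr not in iscsi_net:
        problems.append(f"{addr} is not inside {iscsi_net}")
    # The iSCSI subnet should not overlap any other network in use.
    for net in other_nets:
        if net.overlaps(iscsi_net):
            problems.append(f"{iscsi_net} overlaps {net}")
    return problems


if __name__ == "__main__":
    issues = check_iscsi_address(ESX1_VMK, ISCSI_NET, [MGMT_NET])
    print("OK" if not issues else "\n".join(issues))
```

Note that this only validates the addressing plan. Whether the network is actually non-routable depends on the absence of a gateway and layer-3 interfaces, which a script like this cannot see.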

Quick Note on Jumbo Frames

Although there are a lot of varying opinions on jumbo frames out there, I would encourage you to consider using a 9000 MTU for iSCSI. In a tightly controlled, non-routed VLAN like the one used for iSCSI in a datacenter, most of the reasons to avoid large frames simply do not apply. Storage traffic tends to be very heavy, and the number of frames your ESXi host needs to process with a 1500 MTU will be very high. There is overhead associated with this high packet rate. For example, with 10Gbps networking, a 1GB/s sustained transfer rate is not unreasonable. To put that into perspective, your host would have to process almost 700,000 frames and headers every second with a 1500 MTU. With a 9000 MTU, that number would be a little over 100,000, which is much easier to handle.
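The frame counts above are simple division, and a short Python sketch makes the arithmetic explicit. It assumes 1GB/s means 10^9 bytes per second and treats every frame as carrying a full MTU of data, ignoring Ethernet, IP and TCP header overhead, so the real numbers would be slightly higher. The function name is just for illustration.

```python
def frames_per_second(throughput_bytes_per_s: float, mtu_bytes: int) -> float:
    """Approximate frames per second, assuming every frame carries a full MTU."""
    return throughput_bytes_per_s / mtu_bytes


# Assumption: 1GB/s sustained transfer = 1,000,000,000 bytes per second.
THROUGHPUT = 1_000_000_000

for mtu in (1500, 9000):
    rate = frames_per_second(THROUGHPUT, mtu)
    print(f"MTU {mtu}: {rate:,.0f} frames/s")
```

Running this prints roughly 666,667 frames per second for a 1500 MTU and 111,111 for a 9000 MTU, a sixfold reduction in per-frame processing.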

Configuring jumbo frames is outside of the scope of this post, but in my environment, I have jumbo frames configured in the following locations:

- Distributed Switch – set to 9000 MTU. This configuration passes to physical vmnics.

- VMkernel port for iSCSI – set to 9000 MTU.

- Physical switch – Jumbo frames enabled globally.

- Synology DS1621+ – Jumbo frames enabled on the LAN 5 interface.
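Because jumbo frames have to be enabled at every one of these points, it is worth testing the path end to end with a ping that is not allowed to fragment. The largest ICMP payload that fits in a single frame is the MTU minus the IPv4 and ICMP headers. The sketch below works that out, assuming a standard 20-byte IPv4 header with no options; the function name is made up for illustration.

```python
IPV4_HEADER_BYTES = 20  # assumes no IP options
ICMP_HEADER_BYTES = 8


def max_icmp_payload(mtu_bytes: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu_bytes - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


for mtu in (1500, 9000):
    print(f"MTU {mtu}: max ping payload {max_icmp_payload(mtu)} bytes")
```

For a 9000 MTU this gives 8972 bytes. On an ESXi host, one common way to use that number is `vmkping -I vmk1 -d -s 8972 <target IP>`, where `vmk1` is a placeholder for whichever VMkernel interface carries iSCSI and the target is the storage interface's address. If that ping fails while a smaller one succeeds, something in the path is likely still at a 1500 MTU.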