Welcome to part two of a series on configuring the MikroTik CRS309-1G-8S+IN for use in a VMware home lab. In part one, I discussed RouterOS and how to get VLAN trunking configured for all ports from the CLI. Today I’ll be configuring inter-VLAN routing. Keep in mind that I’m using the CRS309, but this should be applicable to the popular CRS317 and other CRS3xx switches running RouterOS as well.

As I mentioned in my unboxing and overview post, the CRS309 is a fully featured L3 switch. It uses the Marvell Prestera DX switching chip with a forwarding ASIC for line-rate L2 performance at very low power consumption. This is great for a home lab, because your non-routed vSAN, iSCSI and vMotion kernel traffic can take advantage of full 10Gbps line-rate throughput. To use the L3 features, however, the CRS3xx switches rely on the integrated 32-bit ARM CPU cores in the Prestera DX. This means that inter-VLAN routing, firewalling and other L3/L4 features are done in software. MikroTik rates the switch at around 2.5Gbps for anything that needs to be processed by the CPU cores. Nonetheless, this is still plenty of routing throughput for a home lab, and I’m really happy that they include such a large number of these features even if they are somewhat performance limited.

VLAN Interface Creation

In part one I relied on the CLI to get started. Now that we have a management IP configured, we can continue in the UI. To get inter-VLAN routing working on the CRS3xx series switches, there are two steps. First, we’ll need to create VLAN interfaces with the correct VLAN ID specified. Second, we need to assign an IP address to each of these interfaces.
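If you would rather stay in the CLI, the two steps can be sketched roughly as follows for a single VLAN. This is only an illustration, not the exact configuration from my switch: the bridge name `bridge` and the address `192.168.11.1/24` are placeholders I made up for the example, so substitute the bridge you created in part one and your own subnet.

```
# Step 1: create a VLAN interface for VLAN 11 on top of the bridge
# ("bridge" is an assumed name; use the bridge from part one)
/interface vlan add name=INT_VLAN11 vlan-id=11 interface=bridge

# Step 2: assign an IP address to the new VLAN interface
# (192.168.11.1/24 is a hypothetical address, not taken from my lab)
/ip address add address=192.168.11.1/24 interface=INT_VLAN11
```

The address you assign here would typically become the default gateway for hosts in that VLAN.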

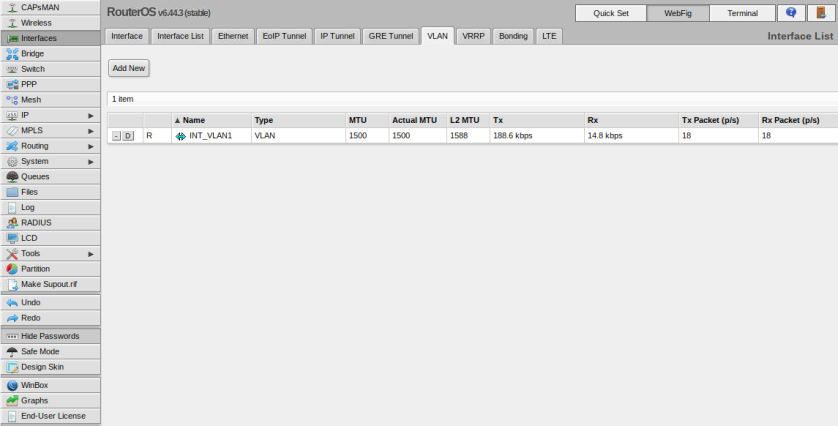

If you recall from part one, I created a VLAN interface in VLAN 1 to regain management connectivity to the switch. That interface is visible in the UI on the Interfaces/VLAN tab:

As a next step, I’ll create VLAN interfaces for the other five VLANs (11, 76, 98, 2005 and 3156). Click the ‘Add New’ button to create a new one. I’ll start with VLAN 11 as an example.

In my case there were only three options I needed to change: Name, VLAN ID and Interface. I like to name my VLAN interfaces INT_VLANX, where X is the VLAN ID, for consistency. Be sure to assign the VLAN interface to the bridge that you created previously so that it is accessible from all ports on the switch. I repeated this for all of my VLANs.
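Repeating the same three settings for every VLAN is also easy to script. The following is a rough RouterOS scripting sketch of that repetition, not something I ran as part of this walkthrough. It assumes the bridge is named `bridge` (a placeholder) and that none of these VLAN interfaces exist yet; the loop variable `vid` is just a name chosen for the example.

```
# Create one VLAN interface per VLAN ID, named INT_VLAN<id>
# "bridge" is an assumed bridge name; replace it with your own
:foreach vid in={11;76;98;2005;3156} do={
    /interface vlan add name=("INT_VLAN" . $vid) vlan-id=$vid interface=bridge
}

# List the VLAN interfaces to confirm they were created
/interface vlan print
```

Each pass through the loop sets the same three values as the UI form: the name, the VLAN ID and the parent interface.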

