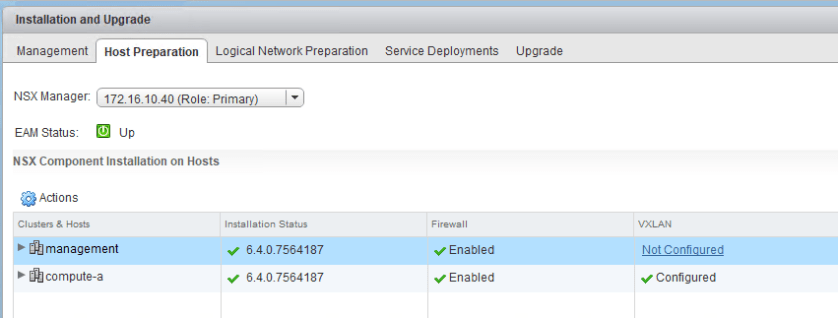

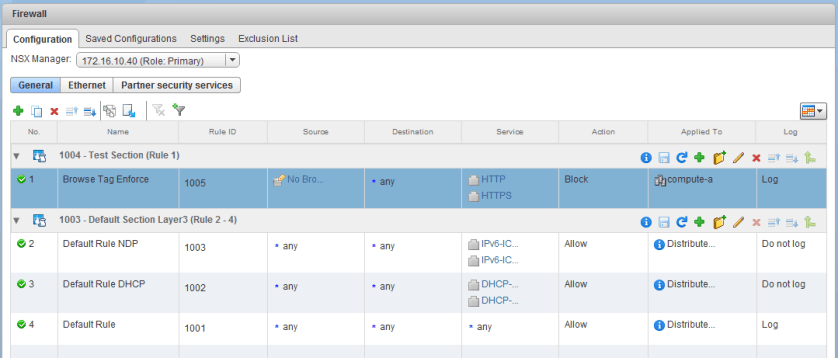

As we saw in the first half of scenario 6, a fictional administrator enabled the DFW in their management cluster, which caused some unexpected filtering. Their vCenter Server was no longer allowed the HTTPS (port 443) traffic that the vSphere Web Client needs to work.

Since we can no longer manage the environment or the DFW using the UI, we’ll need to revert this change using some other method.

As mentioned previously, we are fortunate in that NSX Manager is always excluded from DFW filtering by default. This is done to protect against this very type of situation. Because the NSX management plane is still fully functional, we should, in theory, still be able to send API-based DFW calls to NSX Manager. NSX Manager will in turn be able to publish these changes to the necessary ESXi hosts.

There are two relatively easy ways to fix this that come to mind:

- Use the approach outlined in KB 2079620. This is the equivalent of doing a factory reset of the DFW ruleset via API. This will wipe out all rules and they’ll need to be recovered or recreated.

- Use an API call to disable the DFW in the management cluster. This will essentially revert the exact change the user made in the UI that started this problem.

There are other options, but the two above will work to restore HTTP/HTTPS connectivity to vCenter. Once that is done, some remediation will be necessary to ensure this doesn’t happen again. Rather than picking a specific solution, I’ll go through both of them.
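To make the two options more concrete, here is a minimal Python sketch of how the corresponding REST requests to NSX Manager might be built. The endpoint paths are my assumption of the NSX-v firewall API (a `DELETE` on the global firewall config for the reset, and a `PUT` on the cluster's `enable/false` URL for the disable), so check them against KB 2079620 and the API guide for your version. The hostname, credentials and cluster ID `domain-c1` are hypothetical placeholders. The code only builds the requests; it does not send anything.

```python
import base64
import urllib.request

# Assumed NSX-v endpoints; verify against the API guide for your NSX version.
RESET_PATH = "/api/4.0/firewall/globalroot-0/config"
TOGGLE_PATH = "/api/4.0/firewall/{cluster_id}/enable/{state}"


def _request(manager, path, method, user, password):
    """Build (but do not send) a basic-auth request to NSX Manager."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(f"https://{manager}{path}", method=method)
    req.add_header("Authorization", f"Basic {token}")
    return req


def build_reset_request(manager, user, password):
    """Option 1: reset the DFW ruleset to defaults (wipes all rules)."""
    return _request(manager, RESET_PATH, "DELETE", user, password)


def build_disable_request(manager, cluster_id, user, password):
    """Option 2: disable the DFW on a single cluster."""
    path = TOGGLE_PATH.format(cluster_id=cluster_id, state="false")
    return _request(manager, path, "PUT", user, password)


if __name__ == "__main__":
    # Hypothetical values; substitute your own NSX Manager and cluster ID.
    req = build_disable_request(
        "nsxmanager.example.local", "domain-c1", "admin", "changeme"
    )
    print(req.get_method(), req.full_url)
    # Sending is left to the operator, e.g. urllib.request.urlopen(req).
```

Keeping the request construction separate from sending makes it easy to review the exact method and URL before touching a production NSX Manager.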
